Prior to starting a historical data migration, ensure you do the following:

- Create a project on our US or EU Cloud.

- Sign up for a paid product analytics plan on the billing page (historic imports are free, but this unlocks the necessary features).

- Set the `historical_migration` option to `true` when capturing events in the migration.
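For illustration, here is a minimal Python sketch of a batch request body with this option enabled. It assumes PostHog's `/batch/` capture endpoint accepts a `historical_migration` field alongside `api_key` and `batch`, and that `https://us.i.posthog.com` is the US Cloud ingestion host. The constants and helper names are hypothetical, so confirm them against PostHog's capture API docs before use.

```python
import json
import urllib.request

# Assumption: host and endpoint follow PostHog's public capture API.
POSTHOG_HOST = "https://us.i.posthog.com"  # use the EU host for an EU Cloud project
PROJECT_API_KEY = "<your_project_api_key>"  # placeholder, not a real key


def build_batch_payload(events: list[dict]) -> dict:
    """Wrap a list of events in a batch body flagged as a historical migration."""
    return {
        "api_key": PROJECT_API_KEY,
        "historical_migration": True,  # marks these events as part of a historical import
        "batch": events,
    }


def send_batch(events: list[dict]) -> int:
    """POST one batch of events and return the HTTP status code."""
    body = json.dumps(build_batch_payload(events)).encode("utf-8")
    request = urllib.request.Request(
        f"{POSTHOG_HOST}/batch/",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return response.status
```

Sending events in batches rather than one request per event keeps the number of HTTP calls manageable for large imports.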

⚠️ Experimental: The following migration guide is not comprehensive and generates data that is less accurate than the data captured by PostHog. Contributions to improve it are welcome; feel free to open a PR here.

Compared to other tools, getting data out of Plausible is easy, but converting it to PostHog's schema is difficult. This is because Plausible exports aggregated data rather than the individual events that make up those aggregates, which is what PostHog expects.

This means we can try to convert the data, but it won't be 100% accurate. For example, Plausible data tells you how many visitors, pageviews, and visits there were, but not which user triggered which event at what time. We need to break this data down into individual events that produce an equivalent aggregation.
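One way to break a day's totals into events is to give every visitor one pageview and hand the remaining pageviews to randomly chosen visitors. The Python sketch below does this for a single day; `disaggregate_day` and its seeded random-UUID ID scheme are hypothetical. It reproduces that day's visitor and pageview counts exactly, but because new IDs are drawn for each day, unique visitors over multi-day periods can be overcounted.

```python
import random
import uuid


def disaggregate_day(visitors: int, pageviews: int, rng: random.Random) -> list[str]:
    """Return one distinct_id per pageview for a single day.

    The result has `pageviews` entries and exactly `visitors` unique IDs.
    Sketch assumption: every visitor viewed at least one page (pageviews >= visitors).
    """
    if visitors < 0 or pageviews < visitors or (visitors == 0 and pageviews > 0):
        raise ValueError("expected pageviews >= visitors >= 0")
    # Hypothetical ID scheme: seeded random UUIDs stand in for real users.
    ids = [str(uuid.UUID(int=rng.getrandbits(128), version=4)) for _ in range(visitors)]
    # Give every visitor one pageview so each is counted as a unique user...
    assignments = list(ids)
    # ...then assign the remaining pageviews to randomly chosen visitors.
    extra = pageviews - visitors
    if extra > 0:
        assignments += rng.choices(ids, k=extra)
    rng.shuffle(assignments)
    return assignments
```

Passing in a seeded `random.Random` makes a run reproducible, which helps when comparing the imported totals against Plausible.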

This guide aims to convert aggregate data into events where possible and capture them in PostHog.

Terminology differences: Although pageviews, custom events, and many properties are the same between Plausible and PostHog, there are two major terminology differences:

1. Export data from Plausible

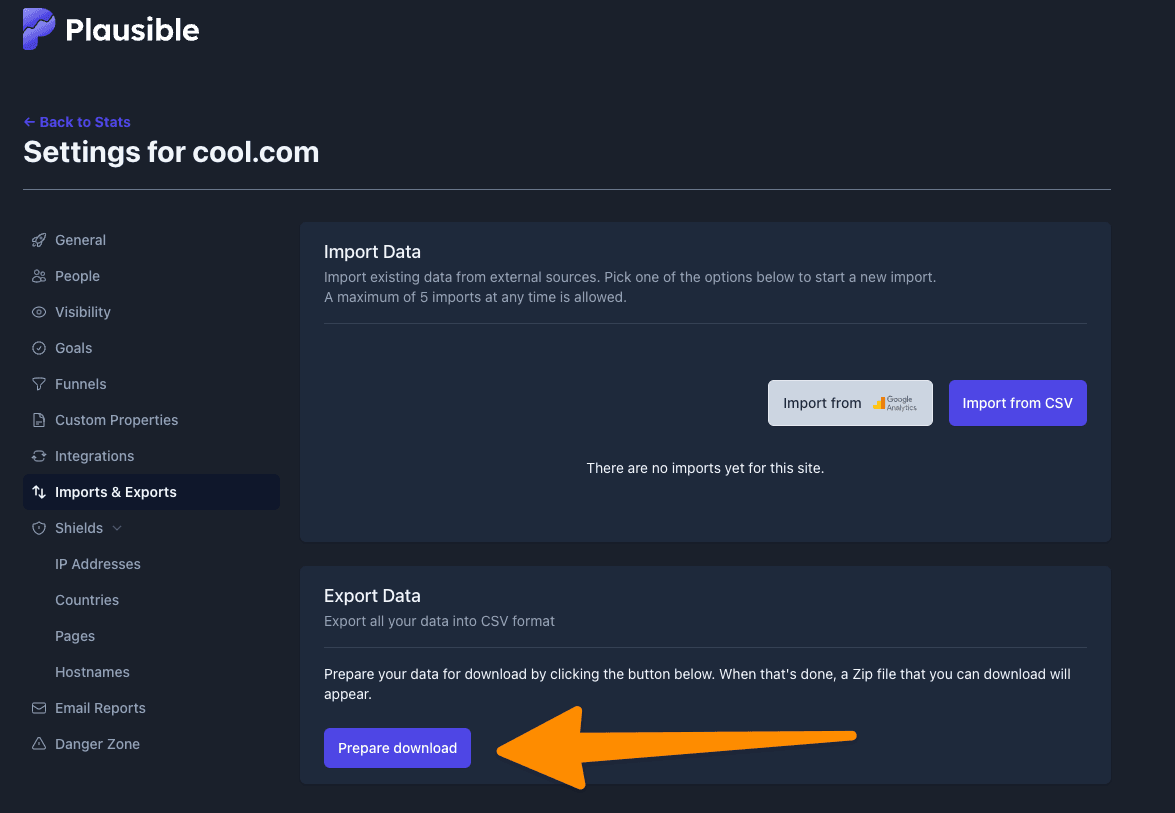

To export data from Plausible, go to your site settings and click the Imports & Exports tab. Then click Prepare download and, once it's ready, download the collection of CSV files.
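A minimal sketch for reading daily totals from the export, assuming the visitors file (named like `imported_visitors_*.csv`) has `date`, `visitors`, and `pageviews` columns. These file and column names are assumptions, so check the headers of your own files and adjust them if they differ.

```python
import csv
from collections.abc import Iterable, Iterator


def read_daily_totals(lines: Iterable[str]) -> Iterator[tuple[str, int, int]]:
    """Yield (date, visitors, pageviews) for each row of a Plausible visitors export.

    Assumption: the CSV has `date`, `visitors`, and `pageviews` columns; any other
    columns (bounces, visits, and so on) are ignored by this sketch.
    """
    for row in csv.DictReader(lines):
        yield row["date"], int(row["visitors"]), int(row["pageviews"])
```

For example, `with open(path, newline="") as f: rows = list(read_daily_totals(f))` loads every day from one file.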

2. Convert Plausible data and capture it in PostHog

🛠️ Work in progress: The following script converts and captures visitors and pageviews but is not comprehensive. For example, it doesn't include:

- Person or event properties like UTMs or channels

- Session data (generating `$session_id` and correct timing for session duration)

- Bounce rate

- Custom events

- Entry or exit pages

Once you have your exported data, you can run the following script to convert it to PostHog's schema and capture pageviews. This script randomly generates `$distinct_id` values for each visitor and randomly assigns pageviews to them. It requires the names and locations of your exported files, as well as your Plausible site timezone, which you can find in your site settings.
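The site timezone matters because Plausible reports each day in that timezone, while event timestamps are best sent in UTC. As a rough illustration of this step (a minimal sketch, not the script itself), the hypothetical `pageview_events` helper below spreads one day's pageviews across that local day and converts each timestamp to UTC. The event shape (`event`, `distinct_id`, `timestamp`, `properties`) is an assumption based on PostHog's capture API.

```python
import random
from datetime import date, datetime, time, timedelta, timezone, tzinfo


def pageview_events(day: str, distinct_ids: list[str], site_tz: tzinfo,
                    rng: random.Random) -> list[dict]:
    """Build one $pageview event per entry in `distinct_ids` for a local date.

    Each event gets a random time within `day` in the site timezone, and the
    timestamp is emitted as a UTC ISO 8601 string.
    """
    # Local midnight at the start of the Plausible day.
    start = datetime.combine(date.fromisoformat(day), time.min, tzinfo=site_tz)
    events = []
    for distinct_id in distinct_ids:
        # Random offset below 24h keeps the local time on the same calendar date.
        local_ts = start + timedelta(seconds=rng.randrange(86_400))
        events.append({
            "event": "$pageview",
            "distinct_id": distinct_id,
            "timestamp": local_ts.astimezone(timezone.utc).isoformat(),
            "properties": {},
        })
    return events
```

With a named timezone, pass something like `zoneinfo.ZoneInfo("Europe/Berlin")` as `site_tz` (this needs the system tz database or the `tzdata` package). Random times within the day are a simplification, so hourly patterns in PostHog will not match real traffic.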

Once you've run the script successfully, you should see data in PostHog. You can then continue your PostHog setup by installing the snippet or a library.